Distributed James Server benchmark

This document provides a benchmark methodology and baseline performance figures for Distributed James, so that James administrators can test and evaluate whether their own Distributed James deployment is performing well.

It includes:

-

A sample Distributed James deployment topology

-

Propose benchmark methodology

-

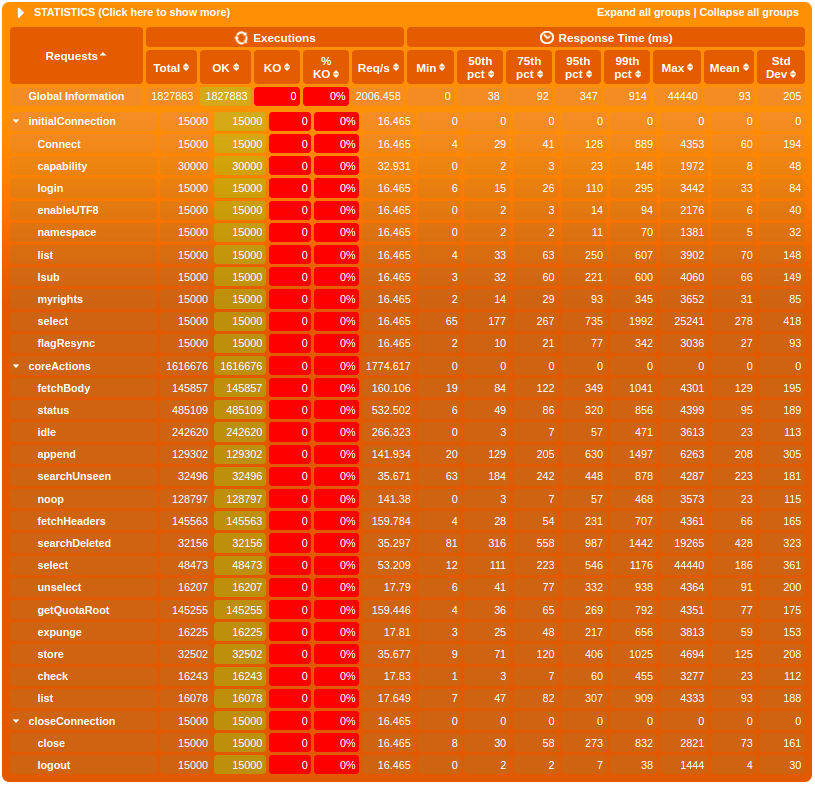

Sample performance results

This aims to help operators quickly identify performance issues.

Sample deployment topology

We deploy a sample Distributed James topology with the following components:

- Distributed James: 3 Kubernetes pods, each with 2 OVH vCores and a 4 GB memory limit.
- Apache Cassandra 4 as the main database: 3 nodes, each with 8 OVH vCores and a 30 GB memory limit (OVH b2-30 instance).
- OpenDistro 1.13.1 as the search engine: 3 nodes, each with 8 OVH vCores and a 30 GB memory limit (OVH b2-30 instance).
- RabbitMQ 3.8.17 as the message queue: 3 Kubernetes pods, each with 0.6 OVH vCore and a 2 GB memory limit.
- OVH Swift S3 as the object storage.

Benchmark methodology and base performance

Provision testing data

Before running the performance tests, make sure you have a Distributed James instance up and running, provisioned with enough testing data to be representative of real usage.

Please follow these steps to provision testing data:

- Prepare James with a custom mailetcontainer.xml that includes the RandomStoring mailet. This makes it easy to provision a large number of emails. Add this under the transport processor:

    <mailet match="All" class="RandomStoring"/>

- Modify provision.sh according to your needs (number of users, mailboxes and emails to be provisioned). As an example, the script currently provisions 10 users, 15 mailboxes and hundreds of emails. To make the performance test representative, you should normally provision thousands of users, thousands of mailboxes and millions of emails.

- Add the permission to execute the script:

    chmod +x provision.sh

- Install postfix (to get the smtp-source command):

    sudo apt-get install postfix

- Run the provision script:

    ./provision.sh

After provisioning once, remove the RandomStoring mailet and move on to the performance testing phase.
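As a rough illustration of what the provisioning steps above amount to, here is a minimal shell sketch. It is not the provision.sh shipped with James. It assumes that the James WebAdmin API is reachable at http://localhost:8000 and that SMTP is reachable at localhost:25. The domain, user names, mailbox names, password and the `DRY_RUN` switch are all hypothetical. It also assumes the mailbox count is per user, and that the RandomStoring mailet spreads the injected emails across users and mailboxes. By default it only prints the commands it would run.

```shell
#!/bin/sh
# Hypothetical provisioning sketch; NOT the provision.sh shipped with James.
# Assumed: WebAdmin at $WEBADMIN_URL, SMTP at $SMTP_HOST, made-up naming scheme.
set -eu

WEBADMIN_URL="${WEBADMIN_URL:-http://localhost:8000}"
SMTP_HOST="${SMTP_HOST:-localhost:25}"
DOMAIN="${DOMAIN:-domain.tld}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = also execute them

# Print the command, and execute it only when DRY_RUN=0.
run() {
  echo "$*"
  if [ "$DRY_RUN" = "0" ]; then
    "$@"
  fi
}

# Usage: provision USERS MAILBOXES_PER_USER EMAILS
provision() {
  users="$1"; mailboxes="$2"; emails="$3"
  # Create the domain, then each user and that user's mailboxes via WebAdmin.
  run curl -sf -X PUT "$WEBADMIN_URL/domains/$DOMAIN"
  u=1
  while [ "$u" -le "$users" ]; do
    user="user$u@$DOMAIN"
    run curl -sf -X PUT "$WEBADMIN_URL/users/$user" -d '{"password":"secret"}'
    m=1
    while [ "$m" -le "$mailboxes" ]; do
      run curl -sf -X PUT "$WEBADMIN_URL/users/$user/mailboxes/box$m"
      m=$((m + 1))
    done
    u=$((u + 1))
  done
  # smtp-source: -m message count, -s parallel sessions, -f sender, -t recipient.
  run smtp-source -m "$emails" -s 10 -f "user1@$DOMAIN" -t "user2@$DOMAIN" "$SMTP_HOST"
}

provision "${USERS:-10}" "${MAILBOXES:-15}" "${EMAILS:-100}"
```

Review the printed commands first. Setting `DRY_RUN=0` executes them against the configured endpoints, and raising `USERS`, `MAILBOXES` and `EMAILS` scales the data set toward the volumes recommended above.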

Provide performance testing method

We provide James Gatling, a tailored tool based on the Gatling load testing framework, for performance testing against IMAP/JMAP servers. Other testing methods are welcome as long as you consider them appropriate.

Here are the steps to run performance tests with James Gatling:

- Set up James Gatling with the sbt build tool.

- Configure Configuration.scala to point to your Distributed James IMAP/JMAP server(s). For more configuration details, please read the James Gatling Readme.

- Run the performance testing simulation:

    $ sbt
    > gatling:testOnly SIMULATION_FQDN

  Here, SIMULATION_FQDN is the fully qualified class name of a performance test simulation.

We provide many simulations in the org.apache.james.gatling.simulation package. Have a look and choose a suitable one.

sbt gatling:testOnly org.apache.james.gatling.simulation.imap.PlatformValidationSimulation is a good starting point. You can also write your own simulation.

Some representative simulations we often use:

- IMAP: org.apache.james.gatling.simulation.imap.PlatformValidationSimulation
- JMAP draft: org.apache.james.gatling.simulation.jmap.draft.PlatformValidationSimulation
- JMAP rfc8621: org.apache.james.gatling.simulation.jmap.rfc8621.PushPlatformValidationSimulation
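To run several of these simulations back to back, a small wrapper script can help. The following is a minimal sketch, assuming it is launched from the root of the James Gatling project with sbt on the PATH. The `run_simulations` function and the `DRY_RUN` switch are illustrative names and not part of James Gatling. By default the script only prints the sbt commands.

```shell
#!/bin/sh
# Sketch: run several James Gatling simulations one after another.
# run_simulations and DRY_RUN are illustrative names, not part of James Gatling.
set -eu

DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the sbt commands, 0 = also execute them

# Whitespace-separated list of fully qualified simulation class names.
SIMULATIONS="
org.apache.james.gatling.simulation.imap.PlatformValidationSimulation
org.apache.james.gatling.simulation.jmap.rfc8621.PushPlatformValidationSimulation
"

# Usage: run_simulations "FQCN1 FQCN2 ..."
run_simulations() {
  # $1 is intentionally unquoted so that it splits into one name per iteration.
  for simulation in $1; do
    echo "sbt \"gatling:testOnly $simulation\""
    if [ "$DRY_RUN" = "0" ]; then
      sbt "gatling:testOnly $simulation"
    fi
  done
}

run_simulations "$SIMULATIONS"
```

Running the simulations sequentially keeps their results from influencing each other. With `set -e`, the script stops at the first simulation whose sbt invocation fails.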